Painless Data Augmentation with BigQuery

Quickly Augmenting Your Datasets with BigQuery Public Data

Google Cloud's BigQuery is a great tool for data scientists to quickly and easily augment their datasets with external data. Specifically, BigQuery has a listing of public datasets from a variety of different sources. All you need is a Google Cloud account and some basic SQL knowledge.

Here are just a few useful public datasets:

- US Census American Community Survey

- COVID-19 Data from Google

- NOAA Global Weather Data

- Public Domain Artworks from The Met

- Bitcoin Transaction Data

A Quick Example

I think one of the most useful among the BigQuery public datasets is the US Census ACS data, which gives multi-year data broken down geographically (by state, zip code, county, etc.).

It has a lot of great demographic information like population (broken down by age, race, gender, marital status, etc.), education levels, employment, income, and much more.

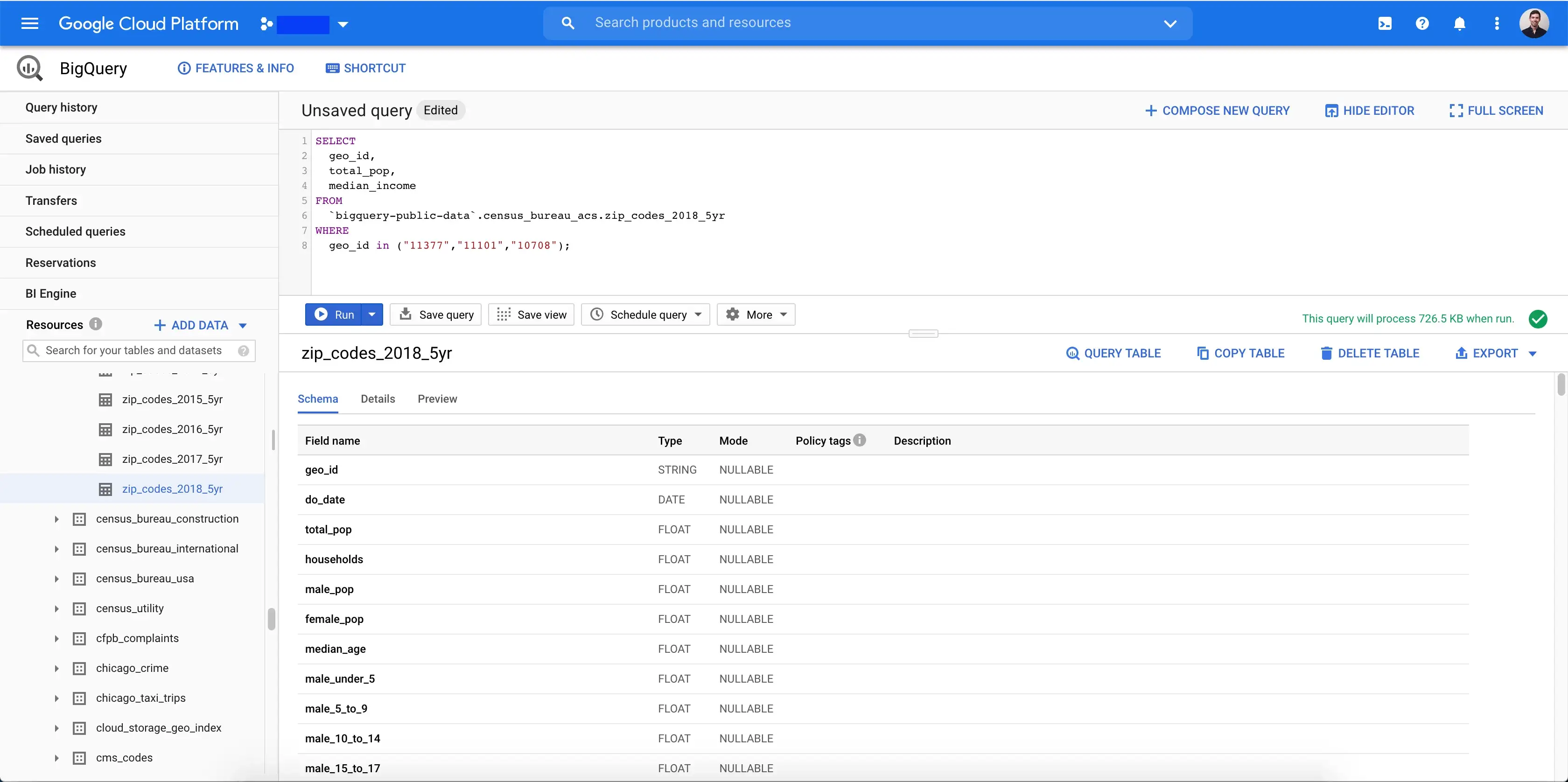

For example, say I wanted to query the total population and median household income for three zip codes in the NYC area. There's a table called zip_codes_2018_5yr that gives a 5-year estimate of census data for the year 2018, broken down by zip code.

Here's what my query will look like:

SELECT

geo_id, -- Where geo_id is the zip code

total_pop,

median_income

FROM

`bigquery-public-data`.census_bureau_acs.zip_codes_2018_5yr

WHERE

geo_id in ("11377","11101","10708");

And I can run it in the BigQuery UI...

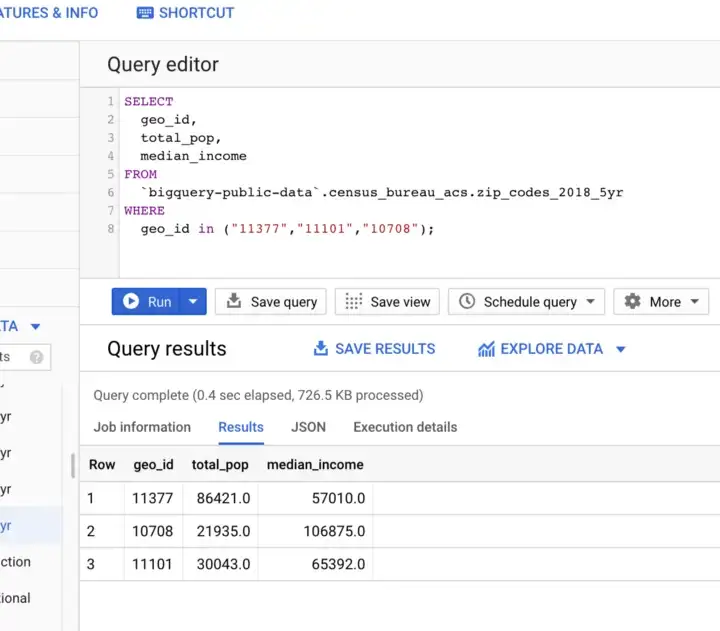

And get the following results...

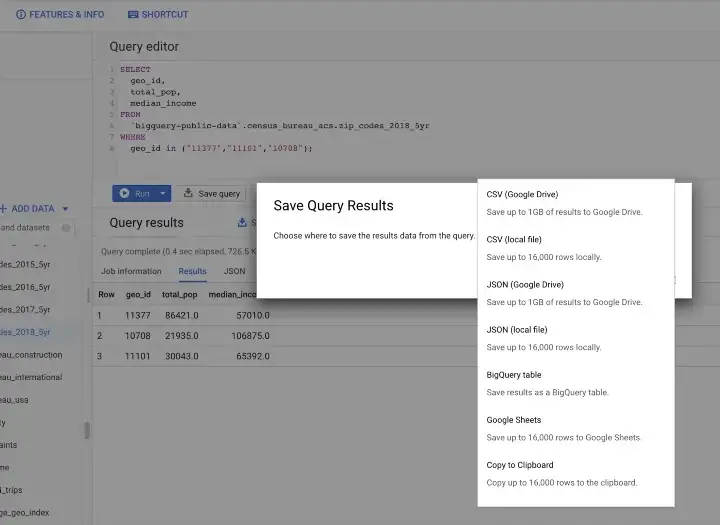

Great! I got my answer in 0.4 seconds and now I can go back and expand my query to get this data for multiple years. Or, I can export the results to a CSV or JSON file to join it up with my data.

Finally, as a bonus, you can connect to BigQuery through Python with the package google-cloud-bigquery.